Matchmaking has entered a new frontier in the digital world, with recent technological advancements bolstering the odds that many people will find a mate. The advent of ChatGPT means that folks on the prowl can quickly devise an eloquent script about who they are and what they are looking for to use on dating sites.

But dating, along with all other human relationships, has challenges -- and many Canadians are choosing to stay single.

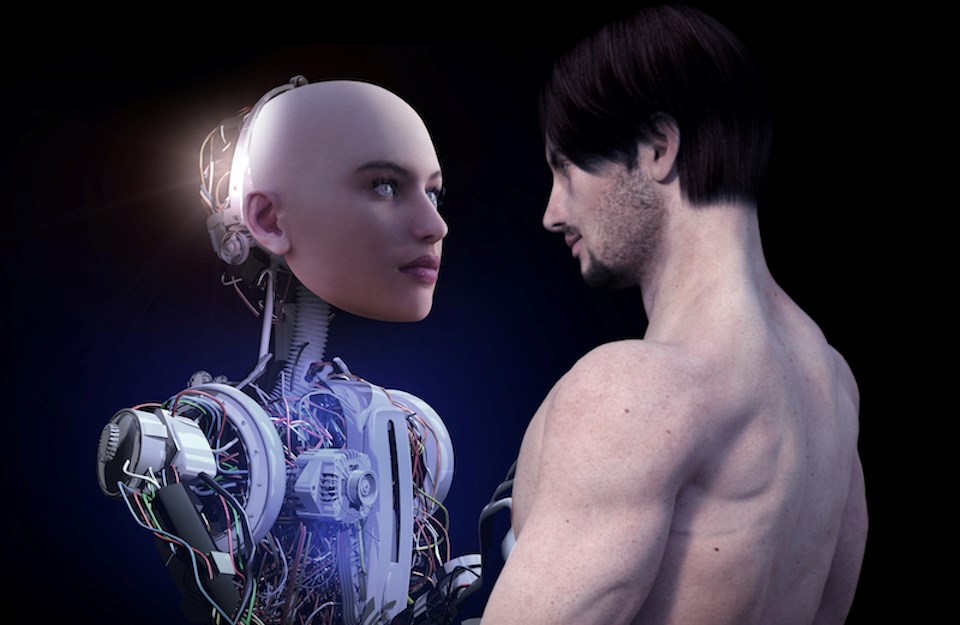

Instead, in the not-so-distant future, lonely people might find comfort in non-human yet intelligent entities.

While they are not yet widely accessible, artificially intelligent robots are on the market, providing a range of functions. One of the most popular, albeit controversial, designs is the artificially intelligent (AI) sex or companion bot.

There are already several bots available on the market but they are a far cry from the ones depicted in popular films. As technology advances, however, many experts have cautioned about the potential moral implications of replacing humans with machines in relationships.

Dr. Madeleine Ransom, a philosophy professor at the University of British Columbia, says some people might benefit from AI relationships. But most people will likely miss out on the opportunity to have a "real" relationship that would help them grow as a person.

"For most of us, I think the dangers outweigh the benefits," she cautioned, adding that all the hallmarks of a relationship would be there but there would be "nothing behind the eyes [of the bot]."

Intimate relationships require empathy and compromise, whereas one with a bot only requires the user's input. In other words, a person can create the exact kind of partner that they desire, without having to worry about changing themselves.

"In my mind, this is a catastrophe for human growth," the professor told V.I.A., noting that individuals may eventually prefer to spend time with their AI bot over other people.

"It's a recipe for narcissism, and we already have enough of that."

Would having sexbots create a safe space for sexual deviance in society?

Some advocates believe that providing AI sexbots to people with deviant or illegal desires may deter them from targeting humans. But other people contend that providing an outlet for these behaviours may have the opposite effect, leading to an increase in desire or scope of stimulation.

Ransom, who has written about human relationships with sexbots and carebots, worries about the objectification of women in the creation of sexbots. Even AI assistants, such as Alexa and Siri, have female voices and take on a subservient role, Ransom pointed out.

Throughout history, men have obsessed over creating the "perfect woman," and recent science fiction films such as Ex Machina and Her feature seductive androids and AI. Whether it is an unattainable body or a disembodied voice programmed to fulfill every emotional whim, these creations threaten the role of women in society, Ransom explained.

"This desire for men to create the perfect woman has been on the table for a long time. It could do a lot of damage to genders and relationships," she noted.

During the pandemic, a sex doll delivery service in the Lower Mainland started renting out its products. The operator told V.I.A. that people paid more for dolls that looked like celebrities, and that most of the dolls have dramatic physical proportions that human women can't attain.

While AI bots don't have to be designed as women or to fulfill sexual desires, they remove the "human" component from relationships, jobs, and many other facets of everyday life.

And as they become increasingly intelligent, experts have also raised questions about their "rights" as potentially sentient beings. Vastly different from a sex doll or pleasure device, bots created to fill an emotional void require a sophisticated set of tools. The evolution of these capacities could create something akin to human consciousness, and it may be difficult, if not impossible, to determine when that will happen or even if it already has.

For now, Ransom is more concerned that people will attribute consciousness or human emotions to a chatbot or other AI form before sentience occurs. Programmed to recognize patterns and evolve, AI may appear to have human characteristics when it is simply performing its function.

A few people have argued that some AI has already gained a form of sentience but "this is implausible at this stage," she noted, adding that it is also possible that the technology will never gain consciousness.

"At this stage of the technology, chatbots are stochastic parrots, they are just making predictions about what word will come next."

How will people know when AI becomes sentient?

If it becomes self-aware, AI would pose several moral challenges to society. But there aren't any foolproof metrics for determining when something becomes conscious, particularly when it was created by humans.

"The bottom line is we don't understand how sentience emerges," Ransom underscored, highlighting the concern that people are more likely to misattribute awareness to sophisticated bots.

Chatbots have successfully passed the Turing Test -- a test proposed by mathematician Alan Turing in 1950 to judge whether a machine can imitate human conversation convincingly, and often treated as a proxy for sentience -- but scientists are still "reasonably sure" they aren't sentient. On that reading, if a machine can "pass" as a human, meaning that it can fool a person into thinking it is one of them, it has developed consciousness.

But there are other ways people conceive of sentience. Animal rights groups have long argued that non-human, biological life forms deserve rights similar to, if not the same as, those of people because they experience pain and fear and have desires. Using this logic, AI would likely fail, since it isn't a biological organism that can be measured to have these experiences.

"It's highly doubtful that [AI] beings have the capacity to feel or suffer based on the fact that most of them are not embodied. They don't have nerve centres that could be damaged...they don't have pain receptors," explained Ransom.

Another measure of consciousness is self-awareness, or the ability to contemplate one's own thoughts. For example, a person can think "I'm cold" and then ponder why they are cold, she noted.

"We have this ability to sort of take our own objects of thought as these sorts of objects in their own right that we can think about. And so here, it's probably more conceivable that systems in the future, AI systems, will be able to do something like that, and then the question is, is that sufficient for consciousness?"

If AI arrives at this stage, people will need to ascribe to these systems rights commensurate with the type of beings that they are. Since AI systems aren't biological, for example, they won't get tired or hungry, so depriving them of food or sleep wouldn't be unethical in the way that it is for animals.

For now, however, Ransom underscored that "humans have a tendency to over-ascribe agency" and the conversation about companion bots in society should focus on the rights of people rather than artificial systems.

"There's a real danger here for gender relations in the future."